Decagon Product Foundations

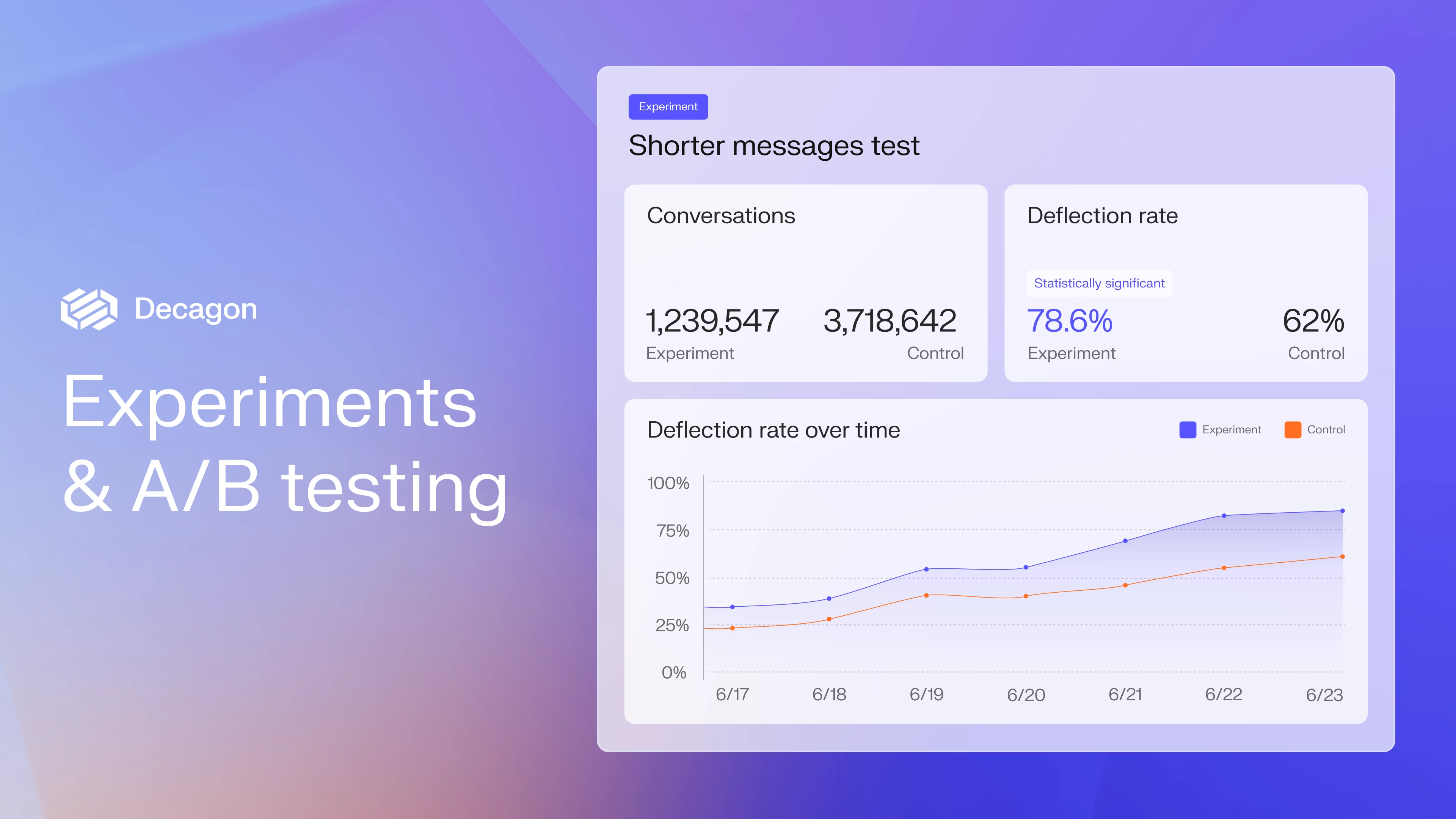

Running experiments and A/B tests

Your AI agent shouldn’t stay frozen in time. Experiments let you test changes safely, measure their impact, and adopt improvements with confidence. Take a scientific approach to continuously improving your Decagon agent.

The process begins with a hypothesis: a specific change you expect to improve a specific metric. You then set up an A/B test where the control group continues with the current behavior, while the variable group receives the change. Decagon randomizes traffic between groups and tracks key metrics like CSAT, deflection, and conversation outcomes.
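To make the idea of randomized traffic concrete, here is a minimal sketch of one common way to split conversations between groups: hashing an identifier into a bucket. This is an illustration only, not a description of how Decagon assigns traffic. The function name `assign_group`, the `conversation_id` and `experiment` parameters, and the hashing scheme are all assumptions.

```python
import hashlib


def assign_group(conversation_id: str, experiment: str, variable_share: float) -> str:
    """Deterministically place a conversation in 'control' or 'variable'.

    Hypothetical helper: hashing the experiment name together with the
    conversation ID gives each conversation a stable, pseudo-random bucket.
    """
    if not 0.0 <= variable_share <= 1.0:
        raise ValueError("variable_share must be between 0 and 1")
    key = f"{experiment}:{conversation_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Map the first 4 bytes of the hash to a number in [0, 1).
    bucket = int.from_bytes(digest[:4], "big") / 2**32
    return "variable" if bucket < variable_share else "control"


# Send roughly 10% of conversations to the variable group.
group = assign_group("conversation-123", "tone-adjustment", 0.10)
```

Because the assignment depends only on the identifier and the experiment name, the same conversation always lands in the same group, and raising `variable_share` later only moves conversations from control to variable, never the other way.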

Experiments can start small, with just a percentage of traffic in the variable group, and scale up as confidence grows. Once results are statistically significant and positive, you can roll out the change fully. If the experiment doesn’t show improvement, roll back, review what you learned, and test a new hypothesis.
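As a rough illustration of what "statistically significant" can mean here, the sketch below runs a two-proportion z-test on a rate metric such as deflection. It assumes the metric can be counted as successes out of total conversations per group. The function name and the example counts are hypothetical, and the test Decagon actually applies may differ.

```python
from math import erfc, sqrt


def two_proportion_z_test(control_successes: int, control_total: int,
                          variable_successes: int, variable_total: int):
    """Return (z, two-sided p-value) for the difference between two rates."""
    p_control = control_successes / control_total
    p_variable = variable_successes / variable_total
    # Pooled rate under the null hypothesis that both groups behave the same.
    pooled = (control_successes + variable_successes) / (control_total + variable_total)
    std_err = sqrt(pooled * (1 - pooled) * (1 / control_total + 1 / variable_total))
    if std_err == 0:
        return 0.0, 1.0  # No variation at all, so no evidence of a difference.
    z = (p_variable - p_control) / std_err
    p_value = erfc(abs(z) / sqrt(2))  # Two-sided tail of the normal distribution.
    return z, p_value


# Made-up counts: 400 of 1,000 control conversations deflected vs. 460 of 1,000.
z, p_value = two_proportion_z_test(400, 1000, 460, 1000)
significant_and_positive = p_value < 0.05 and z > 0
```

A positive `z` with a p-value below the chosen threshold (0.05 is a common convention, not a Decagon-specified value) corresponds to the "significant and positive" case in which a full rollout is reasonable.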

Decagon’s experimentation suite makes this process approachable for CX teams. Running multiple experiments? Decagon automatically reserves at least 20% of traffic as a control baseline, ensuring clean comparisons.
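The arithmetic behind a reserved control baseline can be sketched as follows. The text states only that at least 20% of traffic stays in control. How Decagon enforces this is not described, so the proportional scaling below and the `plan_traffic` helper are assumptions made for illustration.

```python
CONTROL_FLOOR = 0.20  # Minimum control share stated in the text.


def plan_traffic(requested: dict[str, float]) -> dict[str, float]:
    """Scale requested variable-group shares so control keeps at least 20%.

    Hypothetical helper: 'requested' maps experiment names to the share of
    traffic each one asks for (0.0 to 1.0).
    """
    if any(share < 0 for share in requested.values()):
        raise ValueError("shares must be non-negative")
    total = sum(requested.values())
    budget = 1.0 - CONTROL_FLOOR
    # Shrink every request proportionally only when they exceed the budget.
    scale = min(1.0, budget / total) if total > 0 else 1.0
    plan = {name: share * scale for name, share in requested.items()}
    plan["control"] = 1.0 - sum(plan.values())
    return plan


# Two experiments asking for 50% each are scaled down to 40% each.
plan = plan_traffic({"new-aop": 0.5, "escalation-path": 0.5})
```

With these inputs, each experiment receives about 40% of traffic and control keeps about 20%. Requests that already fit within 80% pass through unchanged.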

As a best practice, make experimentation a regular rhythm, not a once-a-quarter exercise. Each test, whether it’s a new AOP, an updated escalation path, or a tone adjustment, generates insights about what drives better outcomes for customers. Over time, this iterative approach compounds into higher performance, greater efficiency, and more reliable customer experiences.

In short, experimentation turns AI improvement from guesswork into evidence-based practice.